Games are dynamic systems that respond to different types of data input to create interesting results for the player. One such source of data is audio, which at its best helps the player form an emotional connection to what’s happening on screen. With this in mind, using audio in many different ways can improve the player’s level of engagement. This short tutorial explains how to use in-game audio data to create a basic synthesizer effect, giving the sound design a graphical representation for the player.

Setup

The first step is to create an empty game object in a Unity scene. Add a Line Renderer component to this game object and set its options as follows: turn Cast Shadows off, Receive Shadows off, and Motion Vectors off; set Materials to 1; under Parameters set the Start Width and End Width to 0.2; and finally turn Use World Space off. These settings allow our game object to render our audio data graphically.

Next, a material must be created and assigned to the line renderer. Unity recommends a particle shader for line renderers, so I use the Particles/Additive shader in my line material. Once the material is created, assign it to the Materials list of the game object’s line renderer; it will appear on the object once assigned.

Last, make sure to add an Audio Source to the game object or to another object in the scene. This allows us to play audio files, which our script can then sample.

This completes the bare bones of our game object. Next is the creation of the script that will drive the object in the scene. At this point not much appears in the scene, but that will change once the script is implemented.

Script

For code cleanliness I’ve split the script tasks into two separate components: one that collects the audio data and another that manipulates the line renderer’s vertex positions. Keeping these as two components lets the same audio data drive different effects as desired and helps avoid redundant operations within our game.

Audio Sampler

The Audio Sampler script holds the audio data sampled during a single frame of the game. To accomplish this the script requires an array of float values to store the audio data. The length of this array must be a power of two (i.e. 2, 4, 8, 16, 32, etc.) because the data comes from a Fourier transform of the audio. We can use the MonoBehaviour OnValidate callback to ensure our array length value is a power of two with the following boolean expression:

(x > 0 && (x & (x - 1)) == 0)

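This expression performs a bitwise AND between an int x and x - 1. A power of two has exactly one bit set, so the result is zero only for the desired powers of two. The first check on x only ensures that x is not negative or zero, which would otherwise give us erroneous results. Finally, above the int field we’ll place the Unity Range attribute [Range(2, 1024)] so the field cannot be set to values that might cause problems within the Unity editor. Because the field is private, it also needs [SerializeField] for the attribute and OnValidate to have any effect in the Inspector. Note that Unity’s documentation for GetSpectrumData lists a minimum array length of 64 and a maximum of 8192, so lengths below 64 may be rejected at runtime.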
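As a quick sanity check outside Unity, the expression can be tried on a few values. The sketch below is plain C#; the class name PowerOfTwoCheck and the RoundUp helper are illustrative names rather than part of the tutorial’s component, and RoundUp simply mirrors the idea of counting upward until the check passes.

```csharp
public static class PowerOfTwoCheck
{
    // A power of two has exactly one bit set, so clearing the lowest
    // set bit with x & (x - 1) leaves zero.
    public static bool IsPowerOfTwo(int x)
    {
        return x > 0 && (x & (x - 1)) == 0;
    }

    // Hypothetical helper: count upward until the value is a power of two.
    // Intended for small values such as array lengths.
    public static int RoundUp(int x)
    {
        if (x < 1) x = 1;
        while (!IsPowerOfTwo(x)) x++;
        return x;
    }
}
```

For example, PowerOfTwoCheck.IsPowerOfTwo(96) is false because 96 has two bits set, while PowerOfTwoCheck.RoundUp(100) gives 128.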

The next field to create is the array that holds our sampled audio data, sized using the array length value described above. The array is initialized in the MonoBehaviour Start method so it’s ready to store data on the first Update call.

The script also requires a Fourier transform window, which reduces spectral leakage, where energy from one frequency band bleeds into neighbouring bands and blurs the data. Unity provides the FFTWindow enumeration, which offers several window types selectable by an enumerated value. The more complex windows, such as Blackman, leak less at the cost of some speed, but feel free to play with the different types based on the effect you want your data to drive.

The Update method, which runs every frame, need only contain the code to populate our initialized float array. To accomplish this we call the static method GetSpectrumData on the AudioListener class with the parameters (float[], int, FFTWindow). The method fills our array in place with spectrum data, based on the audio channel int value and the Fourier transform window provided; it does not return a new array.
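It can help to know roughly which frequencies each array element covers. Assuming the spectrum bins are spread evenly from 0 Hz up to half the output sample rate, the width of each bin follows from simple arithmetic. The sketch below is plain C# under that assumption; SpectrumBins, BinWidthHz and BinStartHz are hypothetical names, and inside Unity the sample rate would typically be read from AudioSettings.outputSampleRate.

```csharp
public static class SpectrumBins
{
    // Width of one bin in Hz, assuming the bins evenly cover
    // 0 Hz up to half the sample rate.
    public static float BinWidthHz(int binCount, int sampleRate)
    {
        return (sampleRate / 2f) / binCount;
    }

    // Lowest frequency covered by the bin at the given index.
    public static float BinStartHz(int index, int binCount, int sampleRate)
    {
        return index * BinWidthHz(binCount, sampleRate);
    }
}
```

With 1024 bins at a 48000 Hz sample rate, each bin would span about 23.4 Hz, which is why most musical energy shows up in the first part of the array.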

Finally we’ll create a public method, GetAudioData, to access the float array data given an int index parameter. Take care to guard against index values smaller than zero or greater than or equal to the length of the array to prevent index-out-of-bounds exceptions, then return the float value at the validated index.

The complete code for this component is provided below.

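    using UnityEngine;
    using UnityEngine.Assertions;

    // Samples the audio spectrum once per frame and exposes it to other components.
    public class AudioSampler : MonoBehaviour
    {
        // Singleton reference so effects can find the sampler without a lookup.
        public static AudioSampler Instance;

        // Serialized so the Range attribute and OnValidate apply in the Inspector.
        [SerializeField]
        [Range(2, 1024)]
        int arrayLength = 1024;

        public int ArrayLength { get { return arrayLength; } }

        float[] samples;

        [SerializeField]
        FFTWindow fourierTransform = FFTWindow.Triangle;

        internal bool IsPowerOfTwo(int x)
        {
            return (x > 0 && (x & (x - 1)) == 0);
        }

        // Runs in the editor when a value changes; steps up to the next power of two.
        void OnValidate()
        {
            while (!IsPowerOfTwo(arrayLength))
            {
                arrayLength++;
            }
        }

        // Enforce a single instance.
        void Awake()
        {
            if (Instance != null && Instance != this)
            {
                Destroy(gameObject);
                return;
            }
            Instance = this;
        }

        void Start()
        {
            samples = new float[arrayLength];
        }

        void Update()
        {
            if (samples == null)
                return;

            // Fills the samples array in place with spectrum data from channel 0.
            AudioListener.GetSpectrumData(samples, 0, fourierTransform);
        }

        public float GetAudioData(int index)
        {
            // Check against the allocated array, not the Inspector value.
            Assert.IsNotNull(samples);
            Assert.IsTrue(index >= 0 && index < samples.Length);
            return samples[index];
        }
    }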

Audio Wave Effect

This component manipulates the line renderer component on the game object created in the Setup section. To accomplish this, a reference to that component must first be stored in a field of our script, which the sample code does in the Awake method. Caching this reference is good practice in Unity, since GetComponent is a fairly expensive call and is best made as rarely as possible.

After storing a reference to the line renderer, a reference to our AudioSampler script should be established. To get it you only need the singleton design pattern implemented in the class through the public static Instance field. Again, store this reference in a field for later use.

Next we need to set the number of vertices for our line. This should equal the number of samples in the samples array of the AudioSampler script so that each vertex matches a sample value. Obtain the array length from the AudioSampler and use it to create an array of Vector3. Once the Vector3 array is declared and initialized with the correct length, the vertex positions must be set up. I suggest creating two Vector3 fields, exposed in the Inspector, to store the start and end positions of your line, with both points offset along a single axis. You could define these points anywhere in space; for simplicity’s sake I am going to set mine along the X-axis only. Using these Vector3s we can calculate the spacing between vertices for the given number of samples like so:

float distance = (lStart - lEnd).magnitude / arrayLength;

Then, to find the next point in space for a vertex, we take the vector between the starting Vector3 and the ending Vector3, normalize it, then scale it by our distance float. The resulting vector lets us step from one vertex to the next in a simple for loop, storing each position in our Vector3 array and then applying the offset vector. After the loop terminates we need only record our line’s end point in the Vector3 array at the last index, then pass the entire array to the line renderer component like so:

lRender.SetPositions(vArray);
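The stepping scheme described above can be tried outside Unity as well. The following is a minimal sketch using System.Numerics.Vector3 as a stand-in for Unity’s Vector3; the LinePoints class and its Build method are hypothetical names chosen for illustration.

```csharp
using System;
using System.Numerics;

public static class LinePoints
{
    // Builds `count` points from start to end using the stepping scheme
    // described in the text: a normalized direction scaled by a fixed distance.
    public static Vector3[] Build(Vector3 start, Vector3 end, int count)
    {
        if (count < 2)
            throw new ArgumentException("At least two points are needed.", nameof(count));

        Vector3 delta = start - end;
        float length = delta.Length();
        float distance = length / count;

        // Avoid normalizing a zero-length vector when start equals end.
        Vector3 step = length > 0f ? Vector3.Normalize(delta) * distance : Vector3.Zero;

        var points = new Vector3[count];
        Vector3 current = start;
        for (int i = 0; i < count - 1; i++)
        {
            points[i] = current;
            current -= step; // delta points from end to start, so subtract to move toward end
        }
        points[count - 1] = end; // the final vertex is pinned to the end point
        return points;
    }
}
```

Building four points from (0, 0, 0) to (4, 0, 0) gives X values of 0, 1, 2 and 4. The final gap is twice the others because the distance is divided by the point count; dividing by count - 1 instead would space every vertex evenly, though with hundreds of samples the difference is hard to see.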

Now our line is set up in script and we have an array containing each vertex, so the last task is to code the Update method to manipulate the line based on the audio data. Again for simplicity’s sake I’m restricting all the vertex manipulations to the Y-axis for this example, so the line will only bend upward. First I declare a Vector3, initialized to Vector3.zero, which represents the offset for a line vertex. The next step is a for loop that steps through our sample data array. Within the body of the loop we set the Y-component of the offset Vector3 to the value of the sample at that index, then pass the new vertex position to our line renderer like so:

lRender.SetPosition(index, vArray[index] + offset);

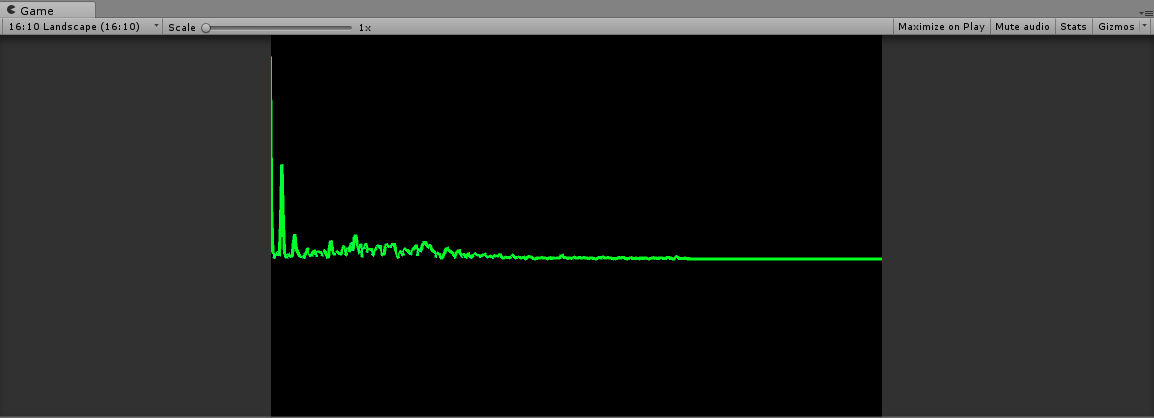

Finally we can close the for loop and finish the Update method. At this point you should be able to plug a sound file into your Audio Source within the Unity editor and observe the line moving. If the line’s motion isn’t noticeable enough, I would recommend either raising the volume on your Audio Source or adding a float field to the Audio Wave Effect to multiply the offset, as can be seen in the sample code below.

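    using UnityEngine;

    // Displaces the vertices of a LineRenderer using data from the AudioSampler.
    public class AudioWaveEffect : MonoBehaviour
    {
        LineRenderer lRender;
        AudioSampler aSampler;

        // End points of the line, set in the Inspector.
        [SerializeField]
        Vector3 lStart, lEnd;

        // Resting position of each vertex.
        Vector3[] vArray;

        // Multiplier applied to each sample before it offsets a vertex.
        [SerializeField]
        [Range(-100f, 100f)]
        float gain = 1f;

        int length = 0;

        void Awake()
        {
            // Cache the component once instead of looking it up every frame.
            lRender = GetComponent<LineRenderer>();
        }

        void Start()
        {
            aSampler = AudioSampler.Instance;
            if (aSampler == null || lRender == null)
            {
                Destroy(gameObject);
                return;
            }
            length = aSampler.ArrayLength;
            LineSetup();
        }

        internal void LineSetup()
        {
            float distance = (lStart - lEnd).magnitude / length;
            Vector3 lVertex = lStart;
            Vector3 offset = (lStart - lEnd).normalized * distance;
            Vector3[] lVerts = new Vector3[length];

            // positionCount replaces the deprecated SetVertexCount used by older Unity versions.
            lRender.positionCount = length;

            for (int i = 0; i < length - 1; i++)
            {
                lVerts[i] = lVertex;
                lVertex -= offset;
            }
            lVerts[length - 1] = lEnd;

            lRender.SetPositions(lVerts);
            vArray = lVerts;
        }

        void Update()
        {
            // Destroy is deferred, so Update can still run once after a failed Start.
            if (aSampler == null || vArray == null)
                return;

            Vector3 audio = Vector3.zero;
            for (int i = 0; i < length; ++i)
            {
                audio.y = aSampler.GetAudioData(i) * gain;
                lRender.SetPosition(i, vArray[i] + audio);
            }
        }
    }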
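Because the sampler is its own component, other effects can read from it without sampling the audio again. As a rough sketch of that idea, the component below scales its game object by the average of the first few spectrum values. AudioScaleEffect, binCount and its gain field are hypothetical names that are not part of the tutorial’s scripts, and the code assumes the AudioSampler class above is present in the scene.

```csharp
using UnityEngine;

// Hypothetical second consumer of AudioSampler: scales this object
// by the average level of the first few spectrum values.
public class AudioScaleEffect : MonoBehaviour
{
    // How many values from the start of the sample array to average.
    [SerializeField]
    [Range(1, 64)]
    int binCount = 8;

    // Multiplier applied to the averaged level.
    [SerializeField]
    float gain = 10f;

    AudioSampler aSampler;

    void Start()
    {
        aSampler = AudioSampler.Instance;
    }

    void Update()
    {
        if (aSampler == null)
            return;

        // Never read past the end of the sampler's array.
        int count = Mathf.Min(binCount, aSampler.ArrayLength);
        float sum = 0f;
        for (int i = 0; i < count; i++)
        {
            sum += aSampler.GetAudioData(i);
        }
        float level = count > 0 ? sum / count : 0f;

        // Grow from the resting scale of one as the level rises.
        transform.localScale = Vector3.one * (1f + level * gain);
    }
}
```

Attaching this to any object in the same scene as the sampler should make it pulse with the lower end of the spectrum; the gain value will likely need tuning per audio clip.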
